Know Your Customers Like Family: The Power of RFM Segmentation with KPMG Case Study

- Abdulazeez Abdullah Temitope

- May 8, 2024

- 9 min read

Updated: Nov 6, 2024

Background Information

01 - Data Quality Assessment

Sprocket Central Pty Ltd, a medium-sized bikes & cycling accessories organisation, has approached Tony Smith (Partner) in KPMG’s Lighthouse & Innovation Team. Sprocket Central Pty Ltd is keen to learn more about KPMG’s expertise in its Analytics, Information & Modelling team.

The client provided KPMG with 3 datasets:

Customer Demographic

Customer Addresses

Transactions data in the past 3 months

You decide to start the preliminary data exploration and identify ways to improve the quality of Sprocket Central Pty Ltd’s data.

Task

Draft an email to the client identifying the data quality issues and strategies to mitigate these issues. Refer to the ‘Data Quality Framework Table’ and resources below for criteria and dimensions which you should consider.

02 - Data insights

Targeting high-value customers based on customer demographics and attributes

Sprocket Central Pty Ltd has given us a new list of 1000 potential customers with their demographics and attributes. However, these customers do not have a prior transaction history with the organisation.

The marketing team at Sprocket Central Pty Ltd is sure that, if correctly analysed, the data would reveal useful customer insights which could help optimise resource allocation for targeted

marketing. Hence, improve performance by focusing on high-value customers.

Task

In building this recommendation, we need to start with a PowerPoint presentation which outlines the approach which we will be taking. The client has agreed on a 3-week scope with the following 3 phases as follows - Data Exploration; Model Development and Interpretation.

03 - Data insights and presentation

The client is happy with the analysis plan and would like us to proceed. After building the model we need to present our results back to the client.

Visualisations such as interactive dashboards often help us highlight key findings and convey our ideas in a more succinct manner. A list of customers or algorithm won’t cut it with the client, we need to support our results with the use of visualisations.

Task

Please develop a dashboard that we can present to the client at our next meeting. Display your data summary and results of the analysis in a dashboard. Specifically, your presentation should specify who Sprocket Central Pty Ltd’s marketing team should be targeting out of the new 1000 customer list as well as the broader market segment to reach out to.

Download data here

Data Quality Issues

Data, which is the lifeblood of informed decision-making, can be compromised by inaccuracies and inconsistencies. Fortunately, Power Query allows us to identify these quality issues with remarkable efficiency. Manual inspection, while tempting, introduces the potential for human error – a well-known phenomenon even in Nigerian physics labs (think error due to parallax!).

Three features that summarize data quality in Power Query include

Column quality

Column profile

Column distribution

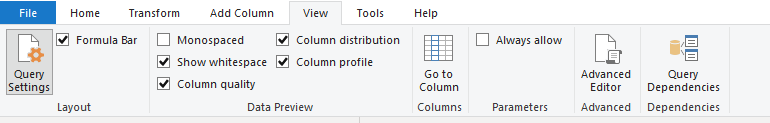

Let’s start with CustomerDemographic. First, we set it to column profiling based on the entire dataset because we are looking for quality issues with the entire data. With column profiling set to the entire dataset, we will get a comprehensive view within seconds.

In the image below, we observe the following within a matter of seconds.

All customer_id is unique, which is expected.

There are no missing values with first_name, but 3% of the data have no last_name. This variable is irrelevant to us for analysis purposes, but this is a quality issue for data storage purposes.

Although gender has no missing values, the value distribution clearly shows the inconsistencies in data entry.

With job_title, because we have 196 different titles, this variable is only suitable for analysis if we can categorize them. For quality issues, 13% of the customers didn’t include a job title.

N.B. — The reason for specifying whether it is needed is for handling missing data. We have identified two variables with missing data. If those two variables are the only ones with missing data, the 1st question you must always ask yourself when dealing with missing data is- Is this variable relevant to my analysis? In our case, No. Hence, I’m not deleting any row or doing any replacement. I’m deleting those variables/columns.

5. Moving onto D.O.B., 2% of the customers have no entry for date of birth; this is an important variable for our analysis. Recall our objective is to identify which customers on the NewCustomerList, the company should target, and to do this, customer analytics go a long way.

Another issue with the D.O.B. is one customer was born in 1893. That may or may not have been an inconsistency, which is worth taking a look at.

With the remaining variables in the CustomerDemographic table, only tenure has missing values with 2% of the data missing.

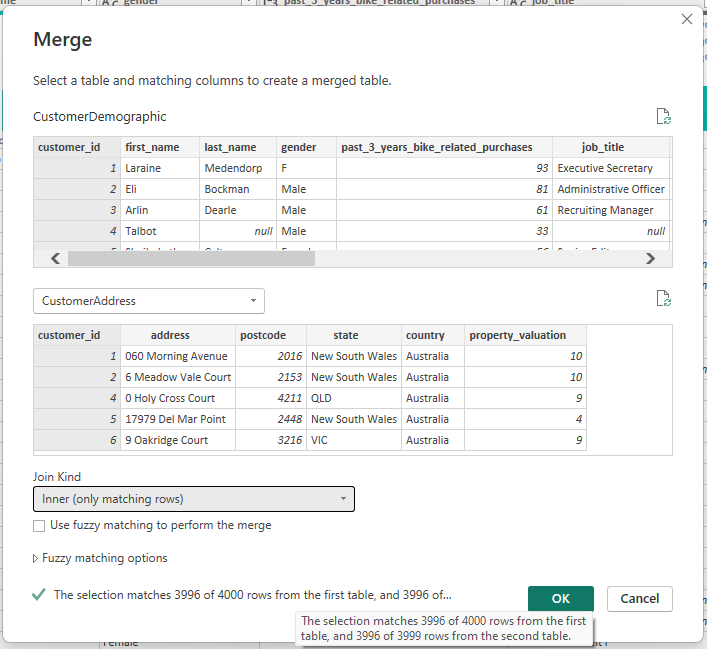

Let’s shift our attention to the CustomerAddress Table. Over here, there is no missing data except the fact that the CustomerDemographic has 4000 customers while this has 3999 customers and the CustomerAddress is supposed to contain the Geographic location of all the customers in the CustomerDemographic table meaning we have an incomplete list.

Likewise, the CustomerAddress table (max customer_id = 4003) has additional customers missing in the CustomerDemographic table (max customer_id = 4000). On trying an inner join, all IDs didn’t match.

We can’t take it a step higher by identifying the mismatch on both tables using a full join.

Only the following set of columns have missing values for the transaction table. There are no other data quality issues in the table.

The last of the data quality issues noticed is that there seem to be some customer id on the transactions table which are not on either the CustomerDemographic or CustomerAddress table.

Likewise, in the CustomerAddress table, there seems to be no uniformity in the state name. For example, NSW & New South Wales, Vic & VictoriaIdentifying the data quality issues is not sufficient.

Identifying these issues is merely the first step. The task of tackling the data quality issue still lies with you.

What is the way forward, or is there no light at the end of this tunnel?

Given the hypothetical nature of this data, we'll choose to delete rows with inconsistencies, recognizing that real-world conditions may need alternate solutions.

Letter: Sprocket Central Pty Ltd Data Quality Assessment

Hello Sprocket,

Thank you for providing KPMG’s Lighthouse & Innovation Team with the three datasets from Sprocket Central Pty Ltd. We appreciate the opportunity to collaborate with you and leverage our expertise in data analytics.

As part of our initial assessment, we conducted a preliminary data exploration to identify potential data quality issues. This process is crucial to ensure the accuracy and reliability of the insights derived from the subsequent analysis.

Our review revealed the following data quality observations:

Missing Values: A limited number of records within the datasets contain missing information in specific fields, such as last name, job title, and date of birth.

Data Inconsistency: Inconsistencies were identified in the representation of certain data points across different datasets. Examples include variations in state abbreviations and data type discrepancies.

Data Discrepancies: Discrepancies were observed between customer IDs present in the transaction and address tables, and those found in the main customer list.

To address these observations and enhance data quality, we propose the following strategies:

Missing Values: Depending on the significance of the missing data point for the intended analysis, we will either remove the affected records or impute missing values based on relevant data patterns.

Data Inconsistency: We will employ data standardization techniques to ensure consistent formatting and representation across all datasets. This includes standardizing state abbreviations and converting data types where necessary.

Data Discrepancies: We will conduct a thorough reconciliation process to identify and rectify inconsistencies between customer IDs across different datasets. This will ensure a unified customer base for analysis.

KPMG's Lighthouse & Innovation Team will continue the data cleaning and transformation process to optimize the datasets for further analysis. Throughout this process, we will keep you informed of any questions or assumptions that may arise.

Following data cleaning, we would welcome the opportunity to schedule a meeting with your data subject matter expert to ensure our interpretations and assumptions regarding the data align with Sprocket Central's understanding.

We are confident that by addressing these data quality issues, we can provide Sprocket Central with robust and actionable insights derived from their customer data.

Thank you for your cooperation. We look forward to a successful collaboration.

Sincerely,

Abdulazeez Abdullah

Data Insights

To understand Customer Behaviour, we will use RFM Analysis. Imagine you run a store, and your customers are like actors on a stage. Some visit all the time, some spend big when they do come, and others seem to have disappeared completely. Wouldn't it be cool to understand why they act the way they do, and even predict when they might show up again?

That's where RFM analysis comes in. It's like a secret code for cracking customer behaviour. RFM stands for Recency, Frequency, and Monetary Value. These three simple things tell you a lot about how your customers interact with your business:

Recency: How recently did a customer buy something? Someone who just bought something is probably more interested in what you have to offer than someone who hasn't bought anything in a long time.

Frequency: How often do they buy stuff? Frequent buyers are like your favourite regulars – they keep coming back for more, which is awesome!

Monetary Value: How much do they spend when they buy something? Big spenders are valuable because they bring in more money for your business.

By looking at these three things together, you can group your customers into different categories. Think of it like sorting your jeans (or other outfits) – some get worn all the time (frequent buyers), some are brand new (new customers), and some might have a hole in them (customers who haven't bought anything lately).

Here's the cool part: you can use these categories to target your customers with special offers and messages that are more likely to interest them. For example, you might send a discount code to someone who hasn't bought anything in a while (a "reviver") or a special birthday offer to someone who buys from you often (a "champion").

RFM analysis can help your organization in multiple ways, some of which are included below:

Find your best customers: These are the people who buy often, recently, and spend a lot. Treat them like royalty! They are Kings and Queens of the business.

Predict what your customers will do: Knowing their habits lets you plan your marketing moves better.

Win back customers who haven't been around lately: Give them a reason to come back!

Talk to each customer in a way that makes sense for them: Not everyone wants the same thing, so don't send the same message to everyone.

By using RFM analysis, you can understand your customers way better. This lets you build stronger relationships with them, which means more sales and a happier business for you! It's like having a spotlight on each customer, but instead of just seeing them, you can see how they interact with your store. Pretty cool, huh?

Let's get started with it. To carry out RFM Analysis, we need a segmentation table. Think of this table as the starting point for our analysis. Download here

First, we need to figure out the last transaction done by each customer. This step involves figuring out when each customer last made a purchase. It's like identifying the last time a Manchester United striker scored, really a piece of work right? We'll use a special DAX formula to achieve this.

Recency tells us how recently a customer made a purchase. Recent scorers, like Salah or Haaland, have a high recency score. We'll calculate this score using another DAX formula.

Frequency tells us how often a customer buys something. Footballers like Mbappe who score multiple goals (frequent transactions) have a high-frequency score. We'll use a DAX formula to calculate this as well.

The monetary value represents how much a customer spends per purchase. Those who buy high-end bikes are like cyclists with expensive gear, contributing a higher monetary value. We'll calculate this score too

Now comes the magic! We'll categorize customers into different segments based on their RFM scores. Think of it like grouping cyclists based on their riding style – some might be "Frequent Flyers" (high frequency), while others could be "Big Spenders" (high monetary value).

We'll create new sections in the RFM table to show individual R, F, and M scores for each customer.

A relationship needs to be created in our model between these two tables

Finally, we'll create two new measures: bike purchases and total revenue, categorized by customer segment. This helps us understand how different customer groups contribute to the overall business.

Revenue

2. Bike purchases

Result Summary

Now, with the intermediary calculations done, we can visualize the results in a clear and visually appealing way. This is like seeing the final premier league table with Arsenal in 2nd position and understanding the trends. We can then analyze these results to tailor marketing strategies and improve customer engagement.

Here's an explainer of the result

In conclusion, RFM analysis provides you with a great tool for better understanding your clients. Segmenting customers based on their recent purchases, purchase frequency, and spending habits allows you to tailor marketing efforts, target high-value customers, and re-engage individuals who haven't connected with your brand recently. RFM analysis enables you to strengthen customer relationships and drive sustainable business success.

Comments